Al Roker never had a heart attack. He doesn’t have hypertension. But if you watched a recent deepfake video of him that spread across Facebook, you might think otherwise.

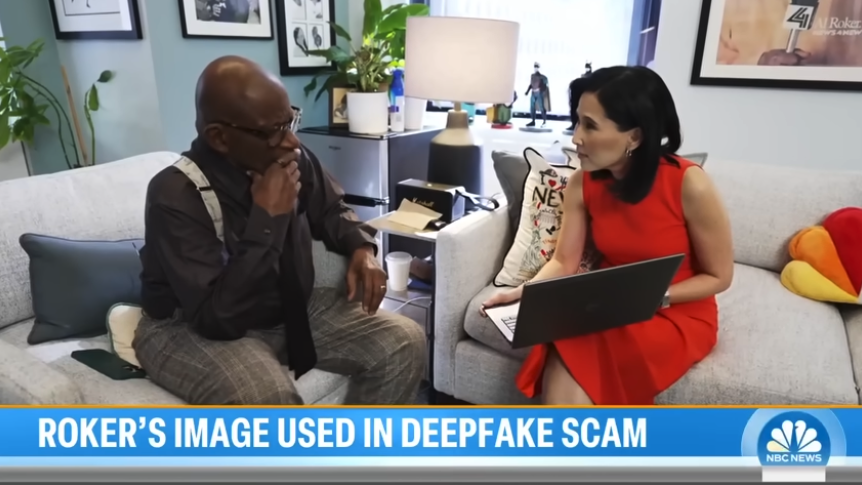

In a recent segment on NBC’s TODAY, Roker revealed that a fake AI-generated video was using his image and voice to promote a bogus hypertension cure—claiming, falsely, that he had suffered “a couple of heart attacks.”

“A friend of mine sent me a link and said, ‘Is this real?’” Roker told investigative correspondent Vicky Nguyen. “And I clicked on it, and all of a sudden, I see and hear myself talking about having a couple of heart attacks. I don’t have hypertension!”

The fabricated clip looked and sounded convincing enough to fool friends and family—including some of Roker’s celebrity peers. “It looks like me! I mean, I can tell that it’s not me, but to the casual viewer, Al Roker’s touting this hypertension cure… I’ve had some celebrity friends call because their parents got taken in by it.”

While Meta quickly removed the video from Facebook after being contacted by TODAY, the damage was done. The incident highlights a growing concern in the digital age: how easy it is to create—and believe—convincing deepfakes.

“We used to say, ‘Seeing is believing.’ Well, that’s kind of out the window now,” Roker said.

From Al Roker to Taylor Swift: A New Era of Scams

Al Roker isn’t the first public figure to be targeted by deepfake scams. Taylor Swift was recently featured in an AI-generated video promoting fake bakeware sales. Tom Hanks has spoken out about a fake dental plan ad that used his image without permission. Oprah, Brad Pitt, and others have faced similar exploitation.

These scams don’t just confuse viewers; they can defraud them. Criminals exploit the trust people place in familiar faces to promote fake products, lure victims into shady investments, or steal their personal information.

“It’s frightening,” Roker told his co-anchors Craig Melvin and Dylan Dreyer. Melvin added: “What’s scary is that if this is where the technology is now, then five years from now…”

Nguyen demonstrated just how simple it is to create a fake using free online tools, and brought in BrandShield CEO Yoav Keren to underscore the point: “I think this is becoming one of the biggest problems worldwide online,” Keren said. “I don’t think that the average consumer understands…and you’re starting to see more of these videos out there.”

Why Deepfakes Work—and Why They’re Dangerous

According to McAfee’s State of the Scamiverse report, the average American sees 2.6 deepfake videos per day, with Gen Z seeing up to 3.5 daily. The scams built on them are believable because the technology can copy someone’s voice, mannerisms, and expressions with frightening accuracy.

And deepfakes don’t just affect celebrities:

- Scammers have faked CEOs to authorize fraudulent wire transfers.

- They’ve impersonated family members in crisis to steal money.

- They’ve conducted fake job interviews to harvest personal data.

How to Protect Yourself from Deepfake Scams

While the technology behind deepfakes is advancing, there are still ways to spot—and stop—them:

- Watch for odd facial expressions, stiff movements, or lips out of sync with speech.

- Listen for robotic audio, missing pauses, or unnatural pacing.

- Look for lighting that seems inconsistent or poorly rendered.

- Verify shocking claims through trusted sources—especially if they involve money or health advice.

And most importantly, be skeptical of celebrity endorsements on social media. If it seems out of character or too good to be true, it probably is.

How McAfee’s AI Tools Can Help

McAfee’s Deepfake Detector, powered by AMD’s Neural Processing Unit (NPU) in the new Ryzen™ AI 300 Series processors, identifies manipulated audio and video in real time—giving users a critical edge in spotting fakes.

This technology runs locally on your device for faster, private detection—and peace of mind.

Al Roker’s experience shows just how personal—and persuasive—deepfake scams have become. They blur the line between truth and fiction, targeting your trust in the people you admire.

With McAfee, you can fight back.